A3E 2025 Anaheim

A3E is excited to be back LIVE at the NAMM Show 2025! A3E + ARAS will once again be providing their forward-thinking educational programming, examining the evolutionary process and the Future of Music, Audio + Entertainment Technology™.

A3E 2025 Anaheim will continue to explore the evolving intersection of music, technology, and creativity. This year’s program will emphasize two critical facets for artists and content creators:

1. Tactical: understanding and mastering day-to-day technologies, especially the rising influence of Artificial Intelligence (AI), from ideation and creation to IP protection, fan engagement and new experiences, and monetization opportunities and strategies;

2. Strategic: gaining critical insights, understanding, and perspectives on technologies that are emerging and will emerge, and that will present both amazing opportunities and profound challenges, not only to their profession but to their unique gift of creativity. A3E clearly sees that AI will continue to set its learning sights on, and apply its transformative computational powers to, human creativity and associated data sets (neurodata). This is what A3E calls Artificial Creativity (AC).

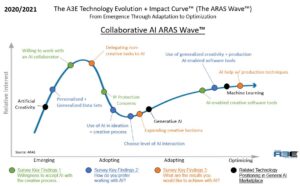

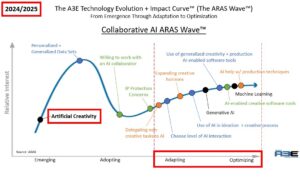

The two graphs below reinforce A3E’s mission to create the programs, topics, and discussions that musicians, content creators, and the entertainment industry need as they evolve through and with the co-existence of AI and AC.

The LEFT graph shows the results of research conducted by A3E and Sitarian Corporation in 2020/2021, in which their communities of music technologists provided insights on AI and AC. These insights are plotted on the “A3E Technology Evolution + Impact Curve™” (the “ARAS Wave™”), with Interest on the Y axis and the stages of emergence, adoption, adaptation, and optimization on the X axis.

We then analyzed and plotted (the RIGHT graph) where those same insights and associated data points stand now and where they are forecast to be in 2025. Many tactical, day-to-day aspects of AI have accelerated to the point of adaptation and are being optimized into content creation (noted between the two vertical dashed red lines). In this sense, AI has become a valuable part of creators’ professions and efforts.

Artificial Creativity, however, remains dormant, sitting low in interest on the ARAS Wave. This is the crucial new frontier that A3E will be injecting into the A3E 2025 Anaheim educational program and into its programming going forward. AC powered by AI needs to be discussed and planned for right now!

New Sessions, Speakers, Panelists, and Moderators are being added daily. Please check back for updates.

Friday, January 24th, 2025; 10:00AM – 11:00AM Pacific Time; Hilton Anaheim; Level 2, Hilton California Ballroom A

A3E & TEC Tracks Special Keynote Session: The Evolution of Content and the Industry, With Rick Beato and Tim Pierce

What implications do the latest technologies have on artistic expression? How will they impact the evolution of music in the digital age? Dive deep into this and much more in a special A3E and TEC Tracks keynote, presented by globally recognized instrumentalists and educators Rick Beato and Tim Pierce. Hear their insights on the future of music, the rise of AI, and what it all means. Don’t miss this exclusive look at where we are and where we’re going with the biggest thought leaders in music.

Please join this distinguished panel, along with NAMM, TEC Tracks and A3E for this crucial and fascinating keynote and industry discussion.

Rick Beato | YouTube Personality, Multi-Instrumentalist, Music Producer, Educator | Everything Music

Rick Beato is an American YouTube personality, multi-instrumentalist, music producer, and educator. Since the early 1980s, he has worked variously as a musician, songwriter, audio engineer, and record producer; he has also lectured on music at universities.

Beato owns and operates Black Dog Sound Studios in Stone Mountain, Georgia. He has produced for and worked in the studio with bands such as Needtobreathe, Parmalee, and Shinedown. On his YouTube channel, he covers different aspects of rock, jazz, blues, electronic, rap, and pop, and he conducts interviews with musicians and producers.

Friday, January 24th, 2025; 11:00AM – 1:00PM Pacific Time; Hilton Anaheim; 4th Floor, Hilton Huntington Room

Artificial Creativity (AC): the Intersection of Content Creation with AI, Neuroscience, and Human-Centric Design & Creativity: Part 1

As artificial intelligence technology delves deeper into neuroscience, it opens up new possibilities through neuroscience-driven insights into brain activity and physiological data. Content creators (as well as “other parties”) now have the opportunity to harness this neurodata to craft personalized and adaptive experiences like never before. In parallel, AI will inevitably use this neurodata, and even create its own “synthetic” neurodata; this is what A3E calls Artificial Creativity (AC). This evolution is transforming music, video, gaming, film, and social media content. From AI-generated music that adapts to the listener’s mood and video content that responds to real-time emotional cues, to social media algorithms designed to maximize engagement using neurodata and immersive games that react to players’ brainwaves, AI and AC are revolutionizing the future of content creation. The opportunities for innovation are vast, especially when applied to health-focused content that adjusts based on real-time stress or mood.

However, these advancements also present significant challenges, including concerns over data sets/data ownership, intellectual property, data privacy risks, potential manipulation, the risk of formulaic content, commercialization of human expression, and the devaluation of human creativity in favor of data-driven design. The ethical dilemmas content creators must navigate are profound. Join this A3E discussion to explore these possibilities and challenges, leaving you with critical new insights and questions about the future of content creation and entertainment technology.

J. Galen Buckwalter | CEO | psyML

J. Galen Buckwalter, PhD, has been a research psychologist for over 30 years. He began his career as a research professor at the University of Southern California. Subsequently, he initiated a behavioral outcomes research program at Kaiser Permanente, Southern California. He transitioned into industry when he created the assessment and matching system for eHarmony.com. He is currently CEO of psyML, using his psychological and social media expertise to design digital systems supportive of individual growth. Galen is also a participant/scientist in a trial of an implanted brain-machine interface geared toward the long-term restoration of function after spinal cord injury. As such, he has unprecedented access to the neural functioning of his own brain. As the front man for Siggy, a four-piece pre-punk band of shrinks, he has written over 70 songs and performed at almost every underground dive in LA. He is currently working to integrate the sounds of his neuronal activity into his music.

Dan Furman, Ph.D. | CEO | Arctop

Dan Furman, PhD, began his neuroscience career as a researcher under neurosurgeon Dr. Christopher Duma, developing a surgical method that used brain imaging to predict the spread of malignant brain tumors and proactively halt tumor growth using gamma radiation. He went on to Harvard, where he earned his A.B. in Neurobiology with a minor in Music. After graduating, he joined a medtech company working on a brain-computer interface (BCI) for Dr. Stephen Hawking, before returning to academia to pursue a PhD in computational neuroscience. There, he was the first in the world to demonstrate that non-invasive brain sensor data could be used to control individual neuroprosthetic fingers. In 2016, Dan co-founded Arctop to create general-purpose BCI technology that unlocks human potential.

Arctop is a cognition company, founded to create widely accessible technology that profoundly improves human life. Arctop’s software applies artificial intelligence to electrical measurements of brain activity in real time, translating people’s feelings, reactions, and intent into actionable data. Arctop’s clients include some of the most innovative organizations at the intersection of AI development and human experience, such as Endel (Apple Watch App of the Year), Stanford Medicine, and the U.S. Air Force Academy. The company’s work has appeared in Frontiers in Computational Neuroscience and The Wall Street Journal, and it has been awarded multiple patents on its state-of-the-art methods of brain decoding.

Eros Marcello | Founder | black dream ai

Eros Marcello is a distinguished design engineer known for his expertise in advancing human-enhancing Artificial Intelligence and adjacent emerging technology.

Marcello’s specialization is in conversational interfaces, Large Language Models (LLMs), and multimodal human-machine interaction. His career includes pivotal roles at Apple, Meta (Facebook), Samsung, Salesforce and more, with seasoned experience across diverse sectors such as Federal Government + Defense, Healthcare + Pharmaceuticals, Higher Education, Consumer Electronics and Audio Technology.

In 2023, Eros founded black dream ai, a startup currently in stealth focused on developing AI-augmented neurotechnology. The firm recently announced two open-source projects: Ouija (a platform slated to serve as a “God View” for users’ brain health) and Converge (the first human-machine collaborative programming language for Neuromorphic Engineering).

Beyond Machine Learning, Eros is deeply invested in Quantum Computing, Ambient Intelligence, Computational Neuroscience and advanced DSP Pro-Audio Technology. He strives to synergize these domains into impactful innovation.

Eros Marcello is based out of NASA Ames Research Park in Silicon Valley, California with a satellite office at NASA’s Kennedy Space Center in Cape Canaveral, Florida.

Jamie Gale | Founder | Jamie Gale Music

Jamie Gale: Curator, Educator, and Visionary in the Guitar Industry.

Jamie Gale is a globally recognized thought leader in the guitar industry, known for curating the Boutique Guitar Showcase, hosting the podcast Life With Strings Attached, and consulting with top makers, brands, and organizations worldwide.

A sought-after speaker and educator, Jamie has lectured at institutions like Harvard University, The NAMM Show, Mondo NYC and La Biennale di Venezia.

His consultancy work focuses on brand development, business strategy, and global engagement.

Through cross-disciplinary exploration and a commitment to collaboration, Jamie Gale is redefining how we think about guitars and their place in the world of art, design, and music.

John Raymond Riley | Assistant General Counsel | U.S. Copyright Office

John Riley is an Assistant General Counsel at the Copyright Office where he has contributed to the Government’s briefs in the Petrella and Aereo Supreme Court cases, the Thaler AI-copyrightability case, the Copyright Small Claims and Copyright and the Music Marketplace policy reports, and, among other regulatory work, has authored rules implementing the Music Modernization Act and Copyright Alternative in Small-Claims Enforcement (“CASE”) Act of 2020.

Prior to joining the Office, John worked as the Senior Manager of IP Enforcement at the U.S. Chamber of Commerce. John earned his LL.M. in IP Law from the George Washington University Law School and his JD from the Dickinson School of Law. He holds BA degrees in Political Science, English, and Communication Arts & Sciences from Penn State University. He has been recognized by the American Intellectual Property Law Association for his distinguished service and contributions in the field of intellectual property law.

Paul Sitar (Moderator) | President, A3E | CEO, Sitarian Corporation

Paul began his professional career as an aerospace underwriter, commercial pilot, and certified flight instructor. However, his passion for information technology led him to transition into the field of emerging technologies that impact all aspects of business and life. He accomplished this by launching research projects, trade shows, and conferences for prominent organizations such as Gartner, Inc. and Advanstar Communications, Inc., as well as for several of his own ventures such as NlightN, Inc., Sitarian Corporation, A3E LLC, and The Electric Vehicle + Energy Infrastructure Innovation & Sustainability Exchange™. These efforts and projects have had a global footprint.

Paul has focused his attention on several areas, including music and entertainment technology, electric vehicles and next-generation mobility, healthcare informatics, electronic commerce, artificial and creative intelligence, robotics, IT security, cyber terrorism prevention, and critical infrastructure protection.

Paul’s experience also includes founding and managing a software security company for seven years. During this time, he successfully patented and commercialized a deviceless one-time password authentication system. Today, Paul continues his work in research, trade show and conference creation, and the launch of innovative projects in technology driven industries.

Friday, January 24th, 2025; 1:00PM – 2:00PM Pacific Time; Hilton Anaheim; 4th Floor, Hilton Huntington Room

Top Producers: Managing the Ever-Growing Impact of AI Tools on Music Production & Engineering + An Open Discussion on the Future of Artificial Creativity

AI has become an essential part of the modern music producer’s toolkit, offering creative, technical, and organizational benefits. From automated mastering to intelligent sound design and live performance optimization, AI-driven tools are enabling producers to work more efficiently while expanding creative possibilities. These tools address the need for faster workflows, the demand for high-quality production, and the desire for more dynamic and personalized music experiences. Learning to leverage these technologies not only saves time but also enhances the creative process, allowing producers and engineers to focus on more strategic and artistic decisions.

The session will also explore how these engineering experts view the evolution of AI into Artificial Creativity (AC), examining the blurred lines between human creativity and machine-generated content, its potential impact on originality, and what this shift means for the roles of musicians, producers, and engineers in the creative process.

Sean Phelan | Producer/Engineer | Republic Records Studios

Credits include Lizzo, Shania Twain, Migos, Mary J. Blige, CeeLo, and Lil Nas X, with multiple Grammy wins.

Morning Estrada | Producer/Engineer | Republic Records Studios

Born and raised in East Los Angeles, Morning Estrada has been an in-demand engineer for top artists for over a decade, working with artists such as Ye (Kanye West), Diddy, Ty $, Rosalia, Coco Jones, Trinidad James, Jessie J, Lucky Daye, Travis Scott, Camper, Brandy, and more.

Rob Christie (Moderator) | Republic Records* Studios Director | Universal Music Group

Two-time Grammy-winning A&R Producer and former Staff Producer for EMI-Capitol Records.

*(Republic Records has been recognized by Billboard as the industry’s #1 label over the last 10 years. It is home to an all-star roster of multi-platinum, award-winning superstar artists such as: The Weeknd, Taylor Swift, Drake, Ariana Grande, John Legend, Post Malone, Metro Boomin, Stevie Wonder, among others.)

Tim Adnitt | Vice President of Product Management for Instruments & Sounds, Sound Platforms & Sound Design | Native Instruments

Tim is Vice President of Product Management for Instruments & Sounds, Sound Platforms and Sound Design at Native Instruments. Based in London and Berlin, he has more than twenty-five years’ experience in the Music Technology industry, having held positions at Sibelius Software and Avid before joining Native Instruments in 2013. Tim is a long-standing advocate for accessibility in Music Technology, co-designing the Kontrol keyboard accessibility features and regularly speaking on the topic at global industry events including NAMM, the Audio Developer Conference and Moogfest. He is a member of the Board of Directors for IMSTA and a member of the Board of Trustees for creative arts company and charity Heart n Soul.

Tim remains active as a Composer and Producer/Engineer. Theatre and dance credits include Remote (Royal Opera House), Forest Sale (Royal Opera House), and The Seekers (Royal Opera House); with Django Bates, Titus Andronicus (Shakespeare’s Globe), House of Games (Almeida Theatre), and Julius Caesar (Royal Shakespeare Company); as well as orchestral projects with London Sinfonietta, Britten Sinfonia, Duisburg Philharmonic, Metropole Orchestra, and ASKO Ensemble.

Production and engineering credits include Tenacity by Django Bates & The Norrbotten Big Band (Jazzwise #2 Jazz Album of the Year, The Guardian #2 Jazz Album of the Year, Downbeat Magazine Best of 2021: Masterpieces), Saluting Sgt Pepper by Django Bates, Frankfurt Radio Big Band & Eggs Laid By Tigers (The Times & The Sunday Times #1 Jazz Album of the Year), Solo Gemini by Nikki Yeoh, Days Distinctive by Attab Haddad, and Dancing on Frith Street – Loose Tubes (Jazzwise #1 Reissue & Archive Album of the Year).

Friday, January 24th, 2025; 2:00PM – 3:00PM Pacific Time; Hilton Anaheim; 4th Floor, Hilton Huntington Room

A Legal Perspective & Discussion on the Future of Music, Audio and Vocal Creation in the Age of Deepfake Technologies

The legal landscape around deepfakes in the United States is rapidly evolving, but there is currently no comprehensive federal law explicitly banning or regulating deepfakes. Instead, various efforts are being made at both the federal and state levels to address the threats posed by this technology. Overall, deepfake laws in the U.S. remain fragmented, with states leading the charge while federal legislation is still in development. Many of the laws target specific issues such as election interference, fraud, and non-consensual sexual content. Notable efforts include the DEEPFAKES Accountability Act and the DEFIANCE Act of 2024, as well as laws in states such as Texas, Florida, California, and New York, which are also mainly focused on elections and sexual content. In addition, Tennessee passed the ELVIS Act, which protects individuals’ likenesses from being used without consent, particularly targeting AI-generated audio that mimics voices.

For musicians, audio professionals, and content creators, emerging laws regulating deepfakes and AI-generated content and voices could have significant implications, both positive and negative. Potential impacts to discuss include: Protection of Likeness and Creative Works; Restrictions on AI-Generated Content; Watermark and Disclosure Requirements; and Economic Impact, Revenue and Licensing. Together, these will bring new layers of legal compliance that musicians and content creators will need to navigate. Join this A3E session for a provocative look at this emerging challenge.

Rachel Stilwell (Moderator) | Owner/Founder | Stilwell Law

Rachel Stilwell is the Owner/Founder of Stilwell Law, a music and intellectual property firm based in Los Angeles. Rachel’s law practice focuses on entertainment, intellectual property, licensing, and commercial transactions. She advises and negotiates on behalf of businesses and creative professionals on complex intellectual property matters and business transactions. Stilwell Law serves businesses and individuals from all industries, with special expertise in entertainment and professional audio. Rachel is proud to have been named to Billboard’s Top Music Lawyers List for the last six consecutive years. Prior to becoming an attorney, Rachel held executive positions with several top record labels, including Verve Music Group, where she ran Verve’s multi-format radio promotional department, supervising all radio activities and promotional tours. Rachel is Co-Chair of the Recording Academy Los Angeles Chapter’s Advocacy Committee. She also serves on the national advocacy committee of the Songwriters of North America.

Ray Parker, Jr. | American Singer, Songwriter & Guitarist

Ray Parker, Jr. is an acclaimed American singer, songwriter, and guitarist best known for his hit song “Ghostbusters,” the theme for the 1984 film of the same name. Born in Detroit, Michigan, Parker’s musical career spans decades, beginning as a session guitarist for platinum-selling artists like Stevie Wonder and Barry White. His distinctive blend of funk, R&B, and pop led to solo success in the late 1970s and early 1980s.

Parker’s hits “You Can’t Change That,” “I’m in Love With The Other Woman,” and his chart-topping “Ghostbusters” solidified his place in pop culture. Beyond his own career as an artist, Parker’s songwriting and production work for artists like Aretha Franklin and the Spinners has earned him recognition in the music industry. A multi-talented artist, Parker’s legacy extends beyond music to television and film, where his infectious sound and catchy hooks have made him a beloved figure in entertainment.

Kevin D. Jablonski | Partner; Chair, Electrical/Computer Science Patent Practice Group | FisherBroyles, LLP

Kevin Jablonski serves as chair of the FisherBroyles Electrical/Computer Science Patent Practice Group. Kevin is a registered patent attorney with a focus on patent portfolio development and patent prosecution. Kevin works with a number of clients across myriad technologies, with a lean toward electrical engineering and computer science. Kevin’s practice involves additional aspects of intellectual property law, including IP licensing, copyrights, and trademarks. He has experience in technology areas such as semiconductor design, analog and digital circuits, and computer architecture solutions. Kevin has also developed a technical focus in the audio arts and sciences and audio-related technologies. Kevin owns a professional-grade recording studio called Hollywood and Vines. Kevin also manages and plays drums in two bands: Whiskey Gaels and The Soul Proprietors. This music arts background and interest allow Kevin to branch out from patent prosecution into entertainment law, helping fledgling artists and music technology companies navigate a confusing legal landscape.

John Raymond Riley | Assistant General Counsel | U.S. Copyright Office

John Riley is an Assistant General Counsel at the Copyright Office where he has contributed to the Government’s briefs in the Petrella and Aereo Supreme Court cases, the Thaler AI-copyrightability case, the Copyright Small Claims and Copyright and the Music Marketplace policy reports, and, among other regulatory work, has authored rules implementing the Music Modernization Act and Copyright Alternative in Small-Claims Enforcement (“CASE”) Act of 2020.

Prior to joining the Office, John worked as the Senior Manager of IP Enforcement at the U.S. Chamber of Commerce. John earned his LL.M. in IP Law from the George Washington University Law School and his JD from the Dickinson School of Law. He holds BA degrees in Political Science, English, and Communication Arts & Sciences from Penn State University. He has been recognized by the American Intellectual Property Law Association for his distinguished service and contributions in the field of intellectual property law.

Friday, January 24th, 2025; 3:00PM – 4:00PM Pacific Time; Hilton Anaheim; 4th Floor, Hilton Huntington Room

Deepfakes and AI: Emerging Solutions for Artists, Musicians, and the Entertainment Industry

Join A3E as we explore the rapidly advancing field of deepfake technology, where artificial intelligence is used to create hyper-realistic audio and visual content that is nearly indistinguishable from reality. This groundbreaking innovation, while showcasing the potential of AI, also poses significant challenges to the entertainment industry, particularly for artists, musicians, and content creators.

This session will delve into the ethical implications of deepfake technology, the legal challenges in combating it, and strategies for protecting original content in an age where digital manipulation is more accessible than ever. Join us to better understand the growing risks posed by deepfakes and discuss emerging solutions to safeguard the integrity of artistic expression and entertainment in the AI and AC era.

Virginie Berger | Chief Business Development and Rights Officer | MatchTune

Virginie Berger, a seasoned professional in the music and tech industries, is renowned for her expertise in music business innovation and in integrating artificial intelligence into music strategy and rights management. Her impressive 20-year career includes pivotal roles at Downtown Music-Songtrust, Armonia, Myspace, and Microsoft, as well as at her own company, Don’t believe the Hype, where she played a key role in generating revenue, forging strategic alliances, and championing artists’ rights. Known for her work as a curator, writer, professor, and artist advocate, Virginie has a rich background in music business and rights innovation. She now extends her expertise as Chief Business Development and Rights Officer at MatchTune, which is pioneering advanced audio fingerprinting technology based on AI algorithms to detect all types of copyright infringement, AI-driven audio modifications, voice cloning, and AI deepfakes.

Roman Molino Dunn | Music Technologist | AudioIntell.ai

Roman is a film composer, music technologist, Billboard-charting producer, and entrepreneur. He has scored films and shows for Netflix, HBO, Disney, Paramount, and Hulu, and founded Mirrortone Recording Studios in NYC. As a music software developer, Roman founded The Music Transcriber (now Composer’s Tech), creating innovative music algorithms acquired by leading companies.

At AudioIntell.ai, he leads the development of AI systems for audio detection and analysis, focusing on detecting AI-generated audio and providing insights across industries like security and entertainment. He also serves as a Senior Operating Partner at Ulysses Management’s Private Equity Group, specializing in music technology investments, and is a co-owner of Antares Audio Technologies.

Eric R. Burgess | Founder/CEO | Credtent

Eric “E.R.” Burgess is the CEO of Credtent.org, a Public Benefit Corporation empowering creators to reclaim control of their content in the Age of AI. With a career spanning journalism, video game design, SaaS innovation, and marketing technology, Eric is a recognized leader in creativity, technology, and content authenticity. At Disney, he spearheaded the Who Wants to Be a Millionaire video games, introducing early downloadable content and selling over 4 million copies. He later led innovations in SaaS at SmartOffice, drove content marketing breakthroughs at PublishThis, and developed industry-first tools like the Earned Media Value Index at a.network. A prolific writer and creator rights advocate, Eric combines innovative storytelling and bleeding-edge technology to inspire a more authentic and financially inclusive digital landscape.

Credtent is a B Corp bringing creativity and AI together ethically and credibly. Our platform empowers creators to exclude their work from AI or to profit from it by setting fair licensing terms for responsible AI companies. Creators can register any type of creative work for AI protection or licensing with our low-price, high-tech solution. Our Content Origin and Sourcing Badges have set industry standards for AI transparency and ethical training. Our Credibility Science enables AI tools to train their models on truly legitimate and accurate content to maximize efficacy, minimize business risk, and increase AI acceptance by creators across the artistic spectrum.

Tony Cariddi (Moderator) | VP Sales & Marketing | PreSonus

Tony Cariddi is a lifelong music and media technology enthusiast who’s led sales and marketing organizations at large and small companies for over 25 years. He’s currently the VP of Sales & Marketing at PreSonus. Previously, Tony led sales and marketing teams at Avid, Dolby, Apogee and various startups. In those roles, he’s led organizational transformations into new business models, technology partnerships, and marketplaces.

Friday, January 24th, 2025; 4:00PM – 5:00PM Pacific Time; Hilton Anaheim; 4th Floor, Hilton Huntington Room

A3E from Studio to Stage: Advanced Remote Collaboration, Real-Time Content Creation and the Ability to Deliver Next-Generation Entertainment Experiences

Advancing low-latency synchronized audio technology has become a crucial objective for musicians and artists, driven by the growing demand for real-time online collaboration in music, audio, and other creative content. This A3E session explores the exciting and powerful partnerships between emerging companies and technology giants aimed at advancing and improving the creative process, from studio production all the way to live performance.

The applications are clear: in gaming and eSports, real-time audio synchronization enhances team communication, giving players a competitive edge. In music collaboration, it enables real-time songwriting and online jam sessions. For broadcasting, it streamlines live production by facilitating faster group communication. And in the emerging frontier of the Metaverse and online concerts, it supports real-time group audio interactions between performers and audiences. Discover how music technologists are collaborating to usher entertainment into the next era.

Matthew Azzarto | Sr Manager, Solution Architecture | AWS

Matt is currently Sr. Manager of Solutions Architecture at AWS, where he leads a team of architects supporting key accounts globally. He has 20+ years of experience in the Media & Entertainment industry, leading teams at the forefront of digital and cloud transformations. He has delivered results on key strategic initiatives at Disney, Hulu, HBO, Akamai, and NBCUniversal. Before working on the content production and distribution side, Matt was a recording artist signed to MCA-Universal. He has had two music publishing deals and several songs placed in film and television. Matt is also a co-owner of a recording studio, where he is working to integrate new technology such as cloud-based services and collaborative software to accelerate content creation for artists and labels.

Hadi Nsouli | Sr Business Development Manager | T-Mobile

I am a product management professional who changed roles to work in business development here at T-Mobile Wholesale. T-Mobile enables me to create new MVNOs and IoT solutions with my partners for their customers.

I like to learn what my prospects’ business needs are, create innovative solutions with them, and take them to market. What we have to offer is all about what the prospect is trying to accomplish. Think of it as a blank slate: build your own product space, and I can help.

I started my career at a startup. I do not presume to know which products will and will not succeed, but I am all about finding that out.

Julian McCrea (Moderator) | CEO | Open Sesame Media

Julian leads Open Sesame Media, a B2B CPaaS (communication platform as a service) venture providing low-latency synchronized audio over 5G to application developers via its patent-pending SyncStage platform. In short, they ‘sync’ a group of up to 8 devices at extremely low latency (8 times faster than Google Meet, 5 times faster than Discord) to enable instant synchronized digital audio experiences. His company just completed the world’s first music collaboration between London, New York, and Toronto using SyncStage with Verizon, Rogers, and Vodafone, called the ‘5G Music Festival’. They are working with major telecoms across the globe to bring their technology to market and are seeing application developer demand in the music, broadcast, gaming, metaverse, faith, and music education verticals. They are headquartered in Los Angeles, CA.

Friday, January 24th, 2025; 5:00PM – 6:00PM Pacific Time; Hilton Anaheim; 4th Floor, Hilton Huntington Room

A3E from Studio to Stage: “Dynamic Audio” – Utilizing AI and Artificial Creativity to Capture Music’s Infinite Spectrum from Creation to Monetization

The future of music creation, performance, and distribution is expanding rapidly due to AI and AC technologies. These tools allow artists to take a single track and transform it into countless versions that evolve based on context, live performance, or even audience interaction. The use of AI in meta-tagging, live remixing, adaptive composition, and real-time feedback enables a creative fluidity between studio recordings and live performances, offering a dynamic spectrum for both artists and fans to explore. As these technologies continue to advance, artists will have unprecedented freedom to create in the space between live performance and traditional recording, and to innovate new products and experiences that blend the excitement of live performance with the accessibility and reach of conventional recording.

James Hill | Co-founder | ever.fm

James Hill is the co-founder and Artistic Director of ever.fm, the platform where songs change every time they’re played. Hill is an award-winning performer and songwriter, a producer and an educator based in Atlantic Canada. His latest musical project is Champagne Weather, whose semi-improvised style inspired the creation of the ever.fm audio engine. Hill and his collaborators are innovating audio formats that thrive in the space between live performance and traditional recording.

Mansoor Rahimat Khan | Founder & CEO | Beatoven.ai

Mansoor Rahimat Khan is the Founder and CEO of Beatoven.ai. He was named to the Forbes 30 Under 30 Asia list in consumer tech. He comes from a family of musicians that has pioneered sitar music for 7 generations. With combined experience in tech and music, Mansoor is an alumnus of the Georgia Tech Center for Music Technology, one of the most reputed music tech labs in the world, and has worked extensively across startups in product and tech roles. His last stint was as a senior product manager at a startup that was acquired for $600 million. Mansoor has experience building at both early and late stages, and as the current leader of Beatoven.ai he has scaled the product to 1.5 million users, with the US and Europe as its primary markets. Mansoor also has an extensive network of artists across the world that supports the company’s growth.

Beatoven.ai is an AI music generator for content creators to compose original royalty-free background music. Users can input prompts in the form of text, audio or video, generate original music, and further edit their output. The company is backed by marquee investors from Asia and Europe and is amongst the top 5 AI music players in the world. The company is also amongst the top 200 AI tools in the world as per Generative AI. The company has pioneered ethical AI by training its models on artists’ data with consent and by licensing music from hundreds of artists, and has been certified by Fairly Trained and AI4Music.

Grayson Sanders | CEO | Chordal

Grayson is the Co-Founder & CEO of Chordal. He is also a composer and co-founded the Emmy winning music agency Safari Riot.

Chordal is a fast-growing music sync platform with a revolutionary collaborative rights technology.

David Ronan | Founder and CEO | RoEx

David Ronan is the Founder and CEO of RoEx, and is deeply committed to revolutionising the creator industry through AI-driven mixing & mastering solutions. Holding a PhD from Queen Mary University of London’s Centre for Digital Music (C4DM), his research has pioneered groundbreaking AI techniques in audio production. He also led the research department at AI Music, a venture that caught Apple’s attention and led to an acquisition. His multifaceted passion for music, audio signal processing, and artificial intelligence has been the driving force behind RoEx. His mission is to democratise audio production and empower artists of all skill levels to produce top-quality work. He is unwaveringly dedicated to enriching the global community of music creators and is actively reshaping the future of media creation.

RoEx is a London-based startup transforming audio production with advanced AI solutions that unlock creative potential and democratise sound quality. Founded by Dr. David Ronan and Prof. Joshua Reiss (co-founder of LANDR) as a Queen Mary University of London spin-out, RoEx builds on cutting-edge audio mixing research. Its consumer tools, Automix and Mix Check Studio, have improved over 1.2 million tracks. For B2B, RoEx’s Intelligent Audio Engine, Tonn, powers platforms like UnitedMasters and Music AI, integrating state-of-the-art mixing and mastering tools that enable distributors, labels, and creators to deliver exceptional audio quality at scale.

Jonathan Rowden (Moderator) | CEO and Co-Founder | Hyperstate Music, Inc

Jonathan Rowden is a professional musician, composer/producer and saxophonist who is now a visionary leader and organizer at the intersection of emerging technology and creativity. He is the founder of Overworld, an immersive production studio, a former co-founder of GPU Audio, and the current CEO and co-founder of Hyperstate Music (with Louis Bell, RIAA legend and executive producer to Post Malone). He is also a strategic advisor to numerous visionary audio and tech companies such as SAWFT, Ultimate AI (creator of Pookie Tools), and Mntra IO.

Hyperstate Music, which he co-founded with Bell, Joshua Aven and Joseph Miller (along with Stability fellow Scott Hawley), is driving industry vision for human-centric AI use at the cutting edge of research and productization. Focused on leveling up humans with AI enrichment, Hyperstate powers up human users’ ability to thrive at the center of their own personal creative flow. Hyperstate launches in 2025 with groundbreaking tools and workflows inspired and powered by the creative instincts Bell has used with the top artists in the industry (Swift, Malone, Cyrus, Cabello, etc.), helping users unlock their inner genius, rather than replacing them, and accelerate towards incredible songwriting and production.

Saturday, January 25th, 2025; 10:00AM - 11:00AM Pacific Time; Hilton Anaheim; 4th Floor, Hilton Huntington Room

Navigating AI's Transformative Impact on Content Creation in Gaming and Game Audio

As AI continues to evolve, content creators in the video game and game audio industries face both unprecedented opportunities and looming threats. This session explores how AI tools are streamlining content production, from procedural world generation to adaptive audio design, while also raising concerns over job displacement, creative control, and intellectual property. A3E and our panel of experts will help you gain insights into leveraging AI to enhance creativity and efficiency without compromising artistic integrity, and strategies for staying competitive in an increasingly automated landscape.

Joe Kataldo | Composer & Sound Designer | Mad Wave Audio, LLC

Joe Kataldo is a composer and sound designer with a rich career spanning over a decade. He boasts a prolific portfolio of work that includes contributions to notable projects such as “Archer: Danger Phone,” “H3VR” and the Team Fortress 2 VR parody, “Terminator Salvation VR Ride”, the recent remaster of “XIII”, “The Last Night”, and “X8”. Notably, his score for “Blind Fate: Edo No Yami” earned a nomination for Best Video Game Score at the Hollywood Music in Media Awards in 2022.

As the owner of Mad Wave Audio, LLC, Joe has crafted audio for numerous Toyota commercials and provided immersive VR audio for theme parks like Songcheng’s “Dreamland” in China. He holds degrees from Berklee College of Music in Boston and the Music Conservatory of Napoli in Italy. In addition to his production work, Joe Kataldo shares his expertise by teaching a post-graduate masterclass on game audio for ADSUM.it.

Cody Matthew Johnson | CEO, Creative Director | Emperia Sound and Music / Emperia Music Group

Cody Matthew Johnson is an award-winning music producer, songwriter, and composer who is known for his irreverent and creative fusion of contemporary styles on some of the world’s largest video game titles and franchises, such as Star Wars Outlaws, Marvel Rivals, Trek to Yomi, Bayonetta 3, Resident Evil 2, Devil May Cry 5, and more.

Cody is the CEO, Creative Director, and Co-Founder of the Emperia Group, founded in 2019, a multi-division full-stack interactive audio solutions company that includes Emperia Sound and Music, Emperia Records, and Emperia Music Group. Since its founding, Emperia Sound and Music has expanded into new divisions vertically integrated across sound and music for interactive media, providing an end-to-end approach that runs from conceptual pre-production through production and post-launch support to music rights management through Emperia Music Group.

Linnea Sundberg (Moderator) | Chief of Staff to the CEO | UnitedMasters Inc.

For the last ~15 years, Linnea Sundberg has operated at the intersection of music and technology. She currently serves as Chief of Staff to the CEO at UnitedMasters Inc., a leading label-services company and platform for independent artists, where she is also responsible for Corporate Development. Previously, Linnea spent over 6 years at Spotify. There, she developed and took artist-facing products to market, including commercial strategies and go-to-market plans for the Spotify for Artists brand. She is also an advisor to Soundverse and an investor in the Dematerialised, and has held previous Corporate Development roles at Splice. Her expertise lies in artist services, copyright and IP value, and streaming market insights. Originally from Stockholm, Sweden, she holds a Bachelor's degree from Harvard University and a Master's degree from the University of Oxford. An advocate for innovation in the creator economy, Linnea is passionate about supporting the next generation of artists through technology-driven solutions.

Josh Andres | President of Josh Andres Music, Composer, Sound Design Orchestrator, Conductor | Josh Andres Music

Josh Andres is a composer, conductor, sound designer, and musician based in Los Angeles, CA. He has established a signature cinematic sound that seamlessly weaves orchestral and electronic music. His sound has been described as a blend of Ennio Morricone, John Carpenter, Quincy Jones, and more. He has worked with the national orchestras of Costa Rica and Czechia and performed at renowned festivals such as The Governors Ball and Electric Daisy Carnival, captivating audiences with his genre-defying performances. His impressive portfolio includes original compositions with Microsoft, Lunacy Games, Jubal Games, Fox Networks, Netflix, Viacom Networks, and more. Josh and his company have also forged collaborative partnerships with industry giants such as Pioneer, Roland, Serato, Monster Energy, and Samsung, contributing their exceptional talents to various projects.

Josh Andres Music, founded by composer and sound designer Josh Andres, is a Los Angeles-based company celebrated for its signature cinematic sound. Known for captivating audiences with genre-defying performances, the company has collaborated with prestigious orchestras, including the national ensembles of Costa Rica and Czechia, and appeared at world-renowned festivals like The Governors Ball and Electric Daisy Carnival. Josh Andres Music boasts an impressive portfolio featuring original compositions for Microsoft, Netflix, Fox Networks, Viacom, and acclaimed game studios such as Lunacy Games and Jubal Games. The company has also partnered with industry leaders like Pioneer, Roland, Serato, Monster Energy, and Samsung. With its innovative approach, Josh Andres Music continues to set the standard in the realms of scoring, sound design, and live performance.

Saturday, January 25th, 2025; 11:00AM - 12:00PM Pacific Time; Hilton Anaheim; 4th Floor, Hilton Huntington Room

Artificial Creativity (AC): the Intersection of Content Creation with AI, Neuroscience, and Human-Centric Design & Creativity: Part 2

As artificial intelligence technology delves deeper into neuroscience, it opens up new possibilities through insights into brain activity and physiological data. Content creators (as well as “other parties”) now have the opportunity to harness this neurodata to craft personalized and adaptive experiences like never before. In parallel, AI will inevitably use this neurodata, and even create its own “synthetic” neurodata, and this is what A3E deems Artificial Creativity (AC). This evolution is transforming music, video, gaming, film, and social media content. From AI-generated music that adapts to the listener’s mood and video content that responds to real-time emotional cues, to social media algorithms designed to maximize engagement using neurodata and immersive games that react to players’ brainwaves, AI and AC are revolutionizing the future of content creation. The opportunities for innovation are vast, especially when applied to health-focused content that adjusts based on real-time stress or mood.

However, these advancements also present significant challenges, including concerns over data sets/data ownership, intellectual property, data privacy risks, potential manipulation, the risk of formulaic content, commercialization of human expression, and the devaluation of human creativity in favor of data-driven design. The ethical dilemmas content creators must navigate are profound. Join this A3E discussion to explore these possibilities and challenges, leaving you with critical new insights and questions about the future of content creation and entertainment technology.

Maya Ackerman, PhD. | CEO/Co-Founder | Wave AI

Dr. Maya Ackerman is a trailblazer in generative AI, serving as CEO and co-founder of WaveAI, a pioneering startup transforming music creation. An Associate Professor at Santa Clara University, Dr. Ackerman has researched generative AI for text, music, and art since 2014. Ackerman was an early advocate for human-centered generative AI, bringing awareness to the power of AI to profoundly elevate human creativity. Under her leadership, WaveAI has emerged as a leader in musical AI, benefiting millions of artists and creators with their products LyricStudio and MelodyStudio. Named a "Woman of Influence" by Silicon Valley Business Journal, she’s been featured in major media and speaks on global stages like the United Nations, Oxford, IBM Research and Stanford University.

Simon Cross | Chief Product Officer | Native Instruments

Simon is the Chief Product Officer at Native Instruments. He leads the product, design, sound design and partnerships teams across Native Instruments, iZotope, Plugin Alliance, and Brainworx. He supports the teams developing new products and services that empower and enable creators to express themselves through sound.

Simon has a lifelong passion for music and creativity: He started DJing at the age of 8, and grew up playing Jazz drums with both big bands and small groups.

Prior to Native Instruments, Simon spent 12 years at Meta where he built communication and developer platforms, and applied cutting edge AI technology to detect and reduce online abuse. Before that, he held several senior engineering and product roles at the BBC and in commercial radio.

Daniel Rowland (Moderator) | Head of Strategy and Partnerships | LANDR

Daniel Rowland is a unique combination of audio engineer, music producer, tech executive, and educator. He is Head of Strategy and Partnerships at LANDR Audio, co-founder of online DAW Audiotool, and longtime instructor of Recording Industry at MTSU. For nearly a decade, his primary focus has been on the empowerment of music creators via LANDR’s ethical AI-driven tools and accessible design, sharing that message on dozens of panels and podcasts.

As a creative, he has worked on Emmy/Oscar-winning and Grammy-nominated music projects totaling 13+ billion streams for Disney/Pixar, John Wick, Star Wars, Marvel, Jason Derulo, Seal, Nine Inch Nails, Nina Simone, Flo Rida, Adrian Belew, Burna Boy, and hundreds of others.

Jessica Powell | CEO | AudioShake

Jessica Powell is the CEO and co-founder of AudioShake, a sound-splitting AI technology that makes audio more interactive, accessible, and useful. Named one of TIME’s Best Inventions, AudioShake is used widely across the entertainment industry to help give content owners greater opportunities and control over their audio.

Powell spent over a decade at Google, where she sat on the company's management team. She began her career at CISAC, the International Confederation of Societies of Authors and Composers, in Paris. Her work has been published in the New York Times, TIME, WIRED, and elsewhere.

Marina Guz | Chief Commercial Officer | Endel

Marina Guz is the Chief Commercial Officer at the sound wellness company Endel. With over a decade of experience in the tech and music industries, Marina leads Endel's record label business and spearheads cutting-edge collaborations between artists and AI. She has successfully signed deals with Universal Music, Warner Music, and Amazon Music, collaborating with renowned artists like James Blake, Grimes, and Sia.

Endel is a pioneering sound wellness company that creates AI-driven, personalized soundscapes designed to reduce stress, improve sleep, and boost productivity. By adapting to real-time inputs such as the time of day, weather, and heart rate, Endel's technology delivers tailored soundscapes to meet the listener's immediate needs. Backed by neuroscience, Endel enables artists to collaborate with AI, blending technology, art, and science to craft wellness music that enhances daily life.

Saturday, January 25th, 2025; 12:00PM - 1:00PM Pacific Time; Hilton Anaheim; 4th Floor, Hilton Huntington Room

Launching AI Onto the Live Stage: Jordan Rudess on the Future of Generative AI for Live Music Performances: Artist, Engineering and AI, “The Nexus of Art + Science + Technology™”

Join A3E for a discussion and demonstration of how AI can augment live music performances to promote artist creativity and bring novel experiences to audiences. As Generative AI slowly becomes more pervasive in the music industry, it becomes more important than ever to properly define the role the technology plays in constructively collaborating with musicians, composers, and producers.

In a recent collaboration, renowned musician Jordan Rudess and the MIT Media Lab have been exploring the usages of Generative AI as a musical improvisation partner for live music performances. Using powerful generative AI models optimized for real-time interaction and specifically trained on Rudess’s data, they were able to develop a synthetic improvisation partner allowing Rudess to duet with his AI counterpart. To embody this non-human musical agent on stage, they designed a 16-foot-high kinetic sculpture that bridges the gap between the audience and the AI.

Jordan Rudess | Musician/Technologist | Jordan Rudess Music

Jordan Rudess is the renowned keyboardist and multi-instrumentalist for the Grammy-winning, platinum-selling progressive rock band Dream Theater. A former classical prodigy, he began studying at Juilliard at age 9 and later blended his classical roots with rock. In addition to his work with Dream Theater and Liquid Tension Experiment, Jordan has released solo projects, including the orchestral symphony Explorations and a recent album on Sony Inside Out. His collaborations span artists like Deep Purple, Steven Wilson, David Bowie, and Enrique Iglesias.

As a technology pioneer, Jordan is a Visiting Artist at MIT's Media Lab, working on AI-driven interactive projects, and has served as an artist-in-residence at Stanford’s CCRMA. Founder of Wizdom Music, he developed award-winning music apps like GeoShred, MorphWiz, and SampleWiz. Known as a tech influencer, he supports brands such as Moises, Roli Labs, Lava guitars, and Lightricks.

Joseph A. Paradiso | Alexander W Dreyfoos Professor | MIT Media Lab

Joe Paradiso directs the Responsive Environments group at the MIT Media Lab. He received his PhD in Physics from MIT in 1981 and a BSEE from Tufts University in 1977, then joined the Media Lab in 1994 after developing spacecraft control and sensor systems at Draper Laboratory and high-energy physics detectors at CERN Geneva and ETH Zurich. He is a pioneer in the development of the Internet of Things and renowned for work in wearable sensing systems, energy harvesting technology, and electronic music controllers. His current research broadly explores how sensor networks and AI augment and mediate human experience, interaction and perception. This has encompassed wireless sensing systems, wearable and body sensor networks, ubiquitous/pervasive computing, human-computer interfaces, space-based systems, sensate materials, digital twins in virtual worlds, and interactive music/media. He has written more than 400 articles and papers and holds over 25 patents in these areas. Joe has also been designing, building, and using his own electronic music synthesizers since the early 1970s. He has always enjoyed composing electronic soundscapes and seeking out edgy and unusual music from around the world.

Perry Naseck | Research Assistant | MIT Media Lab

Perry Naseck is an artist and engineer working with interactive, kinetic, light- and time-based media. He specializes in interaction, orchestration, and animation of systems of sensors and actuators for live performance and permanent installation. Perry completed a Bachelor of Engineering Studies and Arts at Carnegie Mellon in 2022, where he studied both Art and Electrical & Computer Engineering. He worked at the Hypersonic studio in Brooklyn, NY, where he created large interactive, kinetic, and light public art installations found all over the U.S. and abroad. Perry is a Research Assistant in the Responsive Environments group at the MIT Media Lab, where he focuses on creating new experiences that bridge the gaps between musicians, improvisation, and audiences.

Lancelot Blanchard | Research Assistant | MIT Media Lab

Lancelot Blanchard is a musician, engineer, and AI researcher pursuing a PhD at MIT Media Lab’s Responsive Environments group. His research explores the potential for generative AI systems to enhance the creative processes of musicians, with a special focus on live performances. Lancelot has collaborated with multiple artists to create innovative tools for real-time AI-augmented music making. Lancelot holds a Master of Research in Artificial Intelligence and Machine Learning and a Master of Engineering in Computing from Imperial College London, as well as a degree in classical piano from the Conservatory of Rennes, France.

Saturday, January 25th, 2025; 1:00PM - 2:00PM Pacific Time; Hilton Anaheim; 4th Floor, Hilton Huntington Room

Technologies, Trends and Forces Shaping the Future of Music and Creative Education

Emerging technologies, such as AI and AC, are transforming the way musicians and content creators learn and innovate, opening up new avenues for personalized and adaptive instruction. This A3E session will explore how innovative tools are enhancing education by providing real-time feedback, generating creative content, and offering immersive learning experiences through cutting-edge programs and techniques. We will examine the key factors driving these changes, including the growing demand for scalable, individualized learning, the integration of cross-disciplinary skills, cultural competence, and the shift toward experiential learning. Expert discussions will provide insights into both the opportunities and challenges—such as data privacy and the potential devaluation of human creativity—that these advancements bring to creative education, as well as trends shaping the future.

Michele Darling | Chair, Electronic Production and Design (EPD) | Berklee College of Music, Boston

Michele Darling is an accomplished sound designer, composer, recording engineer, and educator. Her work, always rooted in the possibilities of sound, aligns futuristic thinking with music production and sound design. From national television shows and video games to electronic toys and music software, Michele shares her expertise across a range of media types and platforms, and her work can be heard in Emmy-winning entertainment, on the top 100 most influential toys list, and throughout multi-genre music productions worldwide. Michele is currently the Chair of the Electronic Production and Design Department at Berklee College of Music in Boston, MA. She leads the EPD department in educating students in electronic music production, sound design, sound for immersive platforms such as video games and mixed reality, new musical instrument design, and electronic music performance.

Gillian Desmarais | K-12 Music Production and Engineering Teacher | Maplewood Public Schools/District 622

Gillian Desmarais was recently named 2024 National TI:ME Technology Teacher of the Year and a 2024 Yamaha “40 Under 40” Music Educator. She currently teaches K-12 Music Production and Engineering at Maplewood Public Schools in Maplewood, MN. Gillian has been nationally recognized for her work in electronic composition and is an active clinician and adjudicator for state and international music conferences and competitions. She has collaborated with companies such as Ableton Live, Yamaha, Zildjian, Novation, and Jamstik to design new music technologies and curriculum for the classroom. As a music technology graduate student at New York University, she continues to perform IRB research in collaboration with Dr. Alex Ruthmann, Director of the NYU MusEDLab, for developing curriculum, new music ensembles and web applications.

Jonathan Wyner | President | M Works Studios

Jonathan Wyner is founder/chief engineer at M-Works Studios. A musician, educator, performer, and consultant, he has mastered and produced more than 5,000 recordings over 40 years. His credits include James Taylor, David Bowie, Aerosmith, Kiri Te Kanawa, Aimee Mann, Miles Davis, Thelonious Monk, Pink Floyd, Josh Groban, Bruce Springsteen, and Nirvana.

As a Professor of Music Production and Engineering at Berklee College of Music, Jonathan is at the forefront of inspiring and educating the next generation of music professionals. Past President of the Audio Engineering Society and Education Director at iZotope, he has consistently bridged the gap between cutting-edge technology and the art of music creation.

Jonathan brings a wealth of experience as a product consultant, where he leverages subject matter expertise to help craft immersive, user-centered experiences for both creative and technical users alike. His work is a fusion of artistry and technology, driving forward the future of music production and the tools that shape it.

Andrew Hutchens | Assistant Professor of Music, Coordinator of Music/Music industry | Benedict College

Dr. Andrew Hutchens is currently an Assistant Professor of Music and Coordinator of Music/Music Industry at Benedict College. In this role, he is dedicated to providing transformative learning experiences for his students through courses in applied woodwind, music technology, music business, commercial music ensemble, entrepreneurship, and more. Many of his students have participated in internships and mentorships with organizations such as V12 Worldwide, Mezz Entertainment, Zync Music, Well Dunn, and the HBCU in LA program. Additionally, Dr. Hutchens serves on audio advisory councils and has fostered partnerships between Benedict College's program and companies including Splice, Muhammad Ali Center, The Ray Charles Foundation, Yamaha, and American Music Supply. His research has been featured by Apple Education and at conferences such as the NAMM Show and the NCMEA conference. Dr. Hutchens holds degrees from the University of South Carolina and Western Carolina University. He regularly performs and commissions new works for saxophone and mixed chamber ensemble.

Chrissy Tignor | Producer, Engineer, Educator, Content Creator | Young Producers Group

Chrissy Tignor is an innovative educator, audio engineer, producer, and content creator dedicated to empowering music creators through accessible education. Through her social media (@datachild), Chrissy inspires her audience with flea market audio finds and music production tips. She spent eight years as a professor at Berklee College of Music, and she currently collaborates with Young Producers Group on marketing and content strategy. During her time as Splice’s Education Director, she advanced music technology education by launching their inaugural student discount program and collaborating with award-winning producers, brands and institutions. Chrissy started the Audio Upcycle project in the Dominican Republic, creating a recording facility from donated equipment for students at the National Conservatory of Music. A sought-after professional, she has worked with the likes of Spotify, iZotope, Audio Technica and more, and has shared her knowledge at institutions around the globe.

Saturday, January 25th, 2025; 2:00PM - 3:00PM Pacific Time; Hilton Anaheim; 4th Floor, Hilton Huntington Room

An A3E DeepDive - “Three Masters: One World”: How MIDI is Driving Global Content Creation and Collaboration Through the Universal Language of Music

As both an association and a global standard, MIDI continues to drive innovation in products, technologies, and instruments, elevating artistic creativity to new heights. Join this special A3E and MIDI Association session, “Three Masters: One World”, for an in-depth, tactical exploration of how MIDI is powering a global collaboration among musicians from diverse cultures, united by the universal language of music, presented by these world-class master musicians:

Brian Hardgroove | Musician

Brian Hardgroove is a record producer, bassist, drummer and member (on hiatus) of the legendary hip-hop group Public Enemy. Although he’s done productions that include the extraordinary talents of Chuck D (Public Enemy), Steven Tyler & Joe Perry (Aerosmith), Burning Spear, Marc Anthony, Supertramp and The Fine Arts Militia, his most cherished work is the production of two of China’s premier punk rock bands Demerit and Brain Failure.

Hardgroove’s musical repertoire spans the genres of hip-hop, soul, rhythm and blues, rock, and reggae.

In 2013 Hardgroove joined the faculty of Santa Fe University of Art & Design as Artist in Residence until shortly before the school began closing in 2017. In 2009, Gov. Bill Richardson proclaimed May 11th as “Brian Hardgroove Day” in the state of New Mexico for Hardgroove’s commitment to the regional music scene and for using his position as a touring musician, radio personality, writer, performer, and producer to promote the state in interviews while touring the world with Public Enemy and Bootsy Collins. Santa Fe Mayor David Coss proclaimed June 7 as “Brian Hardgroove Day” in the city of Santa Fe as well.

Hardgroove is currently producing two separate album projects with Stewart Copeland (The Police) and Fred Schneider (The B-52’s) in addition to his role as multi-instrumentalist in Ghost In The Hall (ft. DMC of RUN-DMC).

Bian Liunian | Musician

Musician, composer and musical director of the Beijing 2008 Olympic Closing Ceremony and the CCTV New Year’s Gala, one of the most watched television programs in the world.

Athan Billias | Executive Board | The MIDI Association

Athan Billias has dedicated his whole life to music, technical innovation and MIDI. He played keyboard with Herb Reed and the Platters and Jerry Martini from Sly and the Family Stone. He then moved into the musical instrument industry as Product Planning Manager at Korg, where he helped turn the Korg M1 into the best-selling music workstation of all time. He then joined Yamaha as a Director of Marketing, where he was involved with the product planning and marketing of Yamaha products including the Motif Series and the Montage. He has been on the Executive Board of The MIDI Association for over 25 years.

Saturday, January 25th, 2025; 3:00PM - 4:00PM Pacific Time; Hilton Anaheim; 4th Floor, Hilton Huntington Room

Leveraging New Technologies and Practices for Music, Content and Data Asset Management During the Creative Process

Incorporating AI tools for meta-tagging, copyright protection, asset organization, and data security early in the creative process allows musicians and content creators to manage their data more effectively. AI not only improves workflow efficiency but also ensures the long-term protection and traceability of creative assets. By embedding AI in your creative process from the start, you’re safeguarding your work and streamlining collaboration, all while staying aligned with ethical data practices.

Linn Westerberg | Director of Music Distribution | Epidemic Sound

Linn Westerberg is the Director of Music Distribution at Epidemic Sound. She leads a team of problem-solvers and communicators to optimize the streams and revenue for Epidemic Sound’s artists, elevate the music curation in the player based on data, trends, and culture insights, and package and release new music to maximize traction. Prior to Epidemic Sound, Linn helped develop the music programming strategy at Spotify. Linn also has a background in artist management and publishing, working at agencies representing artists such as Labrinth, Paloma Faith, Teddybears, and Taio Cruz. Linn holds a Master’s Degree in Marketing & Media Management from Stockholm School of Economics and a Master’s Degree in Arts & Culture Management from Rome Business School.

Connor Cook | Composer

Connor Cook is an interdisciplinary artist, a composer/multi-instrumentalist/producer/musician/educator based in Los Angeles. She is a 3-time Sundance Fellow, a MacArthur/Sundance Grantee, and has been a part of many high-profile music/art programs. Her work has appeared on NBC, Prime, Hallmark, and more. Connor’s latest project bridges music and science: she is working with soil scientists to serialize actual soil data and interpret musically what climate change sounds like. She also works with emerging music technology including generative AI, experimental models, game systems, sample coding, and other audio technologies. She teaches music composition for media at California State University Northridge.

Shauna Krikorian | Executive Vice President | SongHub

Shauna Krikorian has a diverse background in the music industry that spans music publishing, artist development, and working with new technologies to enhance the creator experience. Shauna serves as EVP of SongHub, an innovative blockchain-powered collaboration, rights management and registration platform developed for creators, music publishers, performing rights organizations and music education programs worldwide. An advocate for more transparency within the music industry, Shauna drives SongHub’s mission to help promote the standardization of clean data practices.

As a creative, Shauna has signed and developed artists for synchronization licensing across various visual media including film, TV, ads and promos. She has also spearheaded synch marketing campaigns for many iconic music publishing catalogs (KISS, George Thorogood, Elvis Presley, Charli XCX, Paul Oakenfold and others). Recent projects include Ralph Lauren, Fitbit, Macy’s, Squishables, Victoria’s Secret, The Good Doctor, Matlock, Yellowjackets, Sweet Tooth, Charmed, and multiple Marvel franchises.

Janishia Jones (Moderator) | Founder / Music Tech Advisor | ENCORE Music Tech Solutions

Janishia Jones is the CEO and Founder of ENCORE Music Tech Solutions, a pioneering consultancy at the intersection of music publishing, technology, and business operations. With over 14 years of experience at companies like Universal Music Group, Kobalt Music Publishing, and EMPIRE, she has driven transformative solutions in rights management and royalties, increasing revenue by 700% at EMPIRE alone. Janishia is also the creator of HRMNY, an innovative platform improving transparency and efficiency in the music industry. A thought leader and advocate for equity, she regularly speaks at global conferences, including SXSW and Canadian Music Week.

ENCORE Music Tech Solutions specializes in building innovative systems to empower artists, rights holders, and music businesses. The company’s flagship technology, HRMNY, provides cutting-edge tools for rights and royalties management, ensuring accuracy, transparency, and efficiency. ENCORE bridges the gap between creative innovation and data-driven business practices, enabling clients to maximize their assets while navigating the complexities of the music industry.

Laura Clapp Davidson | Senior Market Development Manager | Shure Incorporated

Laura Clapp Davidson is a dynamic and accomplished vocalist, songwriter, and Senior Market Development Manager for Shure, a leading manufacturer of high-quality audio equipment. With a career spanning over two decades, Laura has captivated audiences worldwide with her rich, soulful voice and heartfelt performances. An alumna of the Women of NAMM Leadership Summit and an honoree of the She Rocks Awards, she is celebrated for her contributions to the music industry. Laura has played a pivotal role in the development and promotion of cutting-edge music technology products as a respected product specialist and consultant. Her commitment to excellence and unique ability to bridge the gap between artistry and technology have made her an influential and trusted voice in the music community.

Shure: Shure (www.shure.com) has been making people sound extraordinary for a century. Founded in 1925, the Company is a leading global manufacturer of audio equipment known for quality, performance, and durability. We make microphones, wireless microphone systems, in-ear monitors, earphones and headphones, conferencing systems, and more. For critical listening or high-stakes moments on stage, in the studio, or in the meeting room, you can always rely on Shure. Shure Incorporated is headquartered in Niles, Illinois, in the United States. We have more than 30 manufacturing facilities and regional sales offices throughout the Americas, EMEA, and Asia.

Saturday, January 25th, 2025; 4:00PM - 5:00PM Pacific Time; Hilton Anaheim; 4th Floor, Hilton Huntington Room

A3E Case Study: A Holistic Approach to AI for Music Creation - Why We Can’t Innovate in a Vacuum

Some companies have developed solutions and trained on music without regard for the human creators. Join this A3E discussion to learn why and how the AI For Music initiative (aiformusic.info) was introduced, and why guiding AI innovation with policy and governance that protects human creative expression is essential. In this discussion we will hear directly from co-authors of the Principles for Music Creation with AI and from supporting brands that understand the importance of aligned innovation.

BT | CEO | SoundLabs

BT is a music industry icon with an impressive 25+ year career as a Grammy-nominated producer, composer, technologist, and songwriter. His pioneering work in Trance and IDM laid the groundwork for modern EDM, and he is also a classically trained composer. BT’s signature plugins like Stutter Edit 1 & 2 for iZotope and Phobos & Polaris with Spitfire Audio have become essential tools for producers worldwide. BT has written, produced, and remixed for top artists ranging from David Bowie to Madonna, composed for films, and worked on groundbreaking projects like Tomorrowland’s music at Disneyland Shanghai and NFTs in Web3.

SoundLabs is a cutting-edge music production and software company founded by BT, Joshua Dickinson, Dr. Michael Hetrick and Lacy Transeau. With deep roots in the music industry, SoundLabs is poised to revolutionize music production with its next generation tools. This deeply dedicated team of veteran musicians and developers brings unparalleled expertise, having created award-winning plugins, groundbreaking albums, blockbuster film scores, and top-selling sound libraries. Blending human creativity with the vast power of AI, SoundLabs crafts intuitive, inspiring, and ethical tools that transform imagination into reality, redefining what is possible in music production.

Dani Deahl (Moderator) | Head of Communications & Creator Insights | BandLab Technologies

As a prominent figure in music, Dani Deahl has been at the forefront of artist discovery and meaningful industry change for over a decade. She is currently a DJ, producer, public speaker, and Head of Communications & Creator Insights at BandLab. She developed The Verge’s coverage of the intersection of music and technology, helmed the YouTube series The Future of Music, and is also a Trustee for the Recording Academy Chicago chapter.

BandLab Technologies is a collective of global music technology companies on a mission to break down the technical, geographic, and creative barriers for musicians and fans. Empowering creators at all stages of their creative process, the group's wide range of offerings include flagship mobile-first social music creation platform BandLab, award-winning, legendary desktop DAW Cakewalk, powerful artist services platform ReverbNation and recently acquired global beat and music marketplace Airbit. BandLab Technologies is headquartered in Singapore and is a division of Caldecott Music Group.

Chris Horton | SVP Strategic Technology | Universal Music Group

Chris Horton, SVP Strategic Technology, leads Universal Music Group’s Office of Strategic Technology, which is responsible for the technology aspects of digital partner deals and for long-term strategic technology projects and policy. Chris has worked on UMG’s digital distribution deals for more than 25 years, including the first successful download and subscription services. Chris and his team collaborate with technology companies on the development of new music-related products and services, including high-resolution audio, stem-based formats, music-related AI, web3, AR/VR, anti-piracy and more. He has developed and co-founded various music industry standards and standards bodies, including DDEX. He currently leads UMG's AI Task Force and UMG's AI Review Team. Chris received his undergraduate and M.Eng. degrees in computer science from the Massachusetts Institute of Technology.

Universal Music Group exists to shape culture through the power of artistry. UMG is the world leader in music-based entertainment, with a broad array of businesses engaged in recorded music, music publishing, merchandising, and audiovisual content. Featuring the most comprehensive catalog of recordings and songs across musical genres, UMG identifies and develops artists and produces and distributes the most acclaimed and commercially successful music in the world. Committed to artistry, innovation and entrepreneurship, UMG fosters the development of services, platforms and business models in order to broaden artistic and commercial opportunities for our artists and create new experiences for fans.

Alejandro Koretzky | Head of AI/ML & Audio Science Innovation | Splice

Alejandro Koretzky serves as Head of AI & Audio Science Innovation at Splice. With a rich background as an applied researcher, engineer, product leader, 3X founder, and musician, Alejandro has been pivotal in advancing the use of AI in the creative process of music-making. His leadership and innovations, such as Create by Splice, have been widely recognized in major media and supported by multiple patents. A former Fulbright scholar from Argentina, Alejandro holds an MSc in Electrical Engineering from USC, where he specialized in the intersection of Machine Learning and Signal Processing, and contributed to the earlier generation of stem separation technology. Alejandro enjoys advising startups on early-stage strategy, has served as a Techstars mentor, and is a regular speaker at notable institutions and events, including NAMM, Berklee College of Music, Audio Engineering Society, Stanford's CCRMA, and others.

Splice helps music creators bring their ideas to life. A subscription to Splice’s vast industry-leading sounds catalog includes high-quality, licensed samples powered by AI to accelerate deep sound discovery and inspiration. The company also provides affordable access to plugins and DAWs through a rent-to-own Gear marketplace. The New York-based startup is led by CEO and technology advocate, Kakul Srivastava.

Paul McCabe | Senior Vice President of Research and Innovation, Roland Future Design Lab | Roland Corporation

Paul McCabe is the senior vice president of research and innovation at Roland Corporation with 32 years of dedicated service. McCabe has excelled in various leadership roles, including VP global marketing and multiple positions at Roland Canada, such as president & CEO, COO, product manager, and marketing communications manager. He co-authored "Principles for Music Creation with AI", a collaboration between Roland and Universal Music Group that underscores the responsible use of AI in the music creative ecosystem. His influence extends beyond this, as he plays a pivotal role in identifying, researching, and developing concepts around emerging trends in culture, society, technology, and creativity for Roland. A dedicated composer and IT enthusiast, McCabe is renowned for his expertise in AI for music and music creation technologies.

For more than 50 years, Roland’s innovative electronic musical instruments and multimedia products have fueled inspiration in artists and creators around the world. Embraced by hobbyists and professionals alike, the company’s trendsetting gear spans multiple categories, from pianos, synthesizers, guitar, drum and percussion, DJ controllers, audio/video solutions, gaming mixers, livestreaming products, and more. As technology evolves, Roland and its expanding family of brands, including BOSS, V-MODA, Drum Workshop (DW), PDP, Latin Percussion (LP), and Slingerland, continue to lead the way for music makers and creators, providing modern solutions and seamless creative workflows between hardware products, computers, and mobile devices.

A3E, A3E Research and A3E Summits + Events operate and conduct their practices as an independent, unbiased, objective and agnostic industry resource, with the primary goal of providing realistic, actionable, practicable business insights, information, as well as educational programming and content.